www.ptreview.co.uk

15

'24


CloudFest 2024: Revolutionizing server performance for AI innovation

The Samsung Semiconductor team was excited to attend and to showcase its latest memory solutions, designed to support the extreme pace of AI innovation.

www.samsung.com

Each year, CloudFest brings together more than 8,000 cloud computing professionals to discuss new innovations and the future of cloud technology. This year marked the event’s 20th anniversary and explored the role of artificial intelligence in revolutionizing the cloud computing space. As a leader in AI innovation, Samsung showcased its latest DDR5 RDIMM and PCIe Gen5 SSD server products at its booth, and Changwoo Sun, Head of Storage New Biz Group at Samsung Semiconductor, was invited to the stage to outline Samsung Semiconductor’s vision for memory in the age of AI. Let’s take a deeper look at how Samsung’s memory solutions will help drive future innovations to support and define the AI era.

The link between memory and AI

The pace of AI innovation is rapidly increasing. The number of parameters in large transformer models has been growing exponentially, by 410x every two years, a growth rate that has the potential to accelerate even further. In contrast, single-GPU memory is only growing at a rate of 2x every two years. The risk of this divide is an increase in latency, something that could seriously undermine the power of AI. In applications where data accumulates continuously, high-performance storage becomes essential to securing low latency. At the same time, research shows that the number of parameters a GPU can process increases steeply if additional system memory is added. The industry now realizes that more memory means better overall performance in AI and machine learning.
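To make the size of that divide concrete, the two growth rates quoted above can be compounded side by side. This is only a back-of-the-envelope sketch: it assumes both rates stay constant, which the article itself suggests may not hold, and the function names are illustrative. Per two-year period the gap widens by 410 / 2 = 205x.

```python
# Growth multipliers per two-year period, as quoted in the article.
MODEL_PARAM_GROWTH = 410.0   # parameters in large transformer models
GPU_MEMORY_GROWTH = 2.0      # memory available on a single GPU
PERIOD_YEARS = 2.0


def compound(multiplier: float, years: float) -> float:
    """Total growth after `years`, given a multiplier per two-year period."""
    return multiplier ** (years / PERIOD_YEARS)


def gap_factor(years: float) -> float:
    """How much faster model size has grown than single-GPU memory."""
    return compound(MODEL_PARAM_GROWTH, years) / compound(GPU_MEMORY_GROWTH, years)


if __name__ == "__main__":
    for years in (2, 4, 6):
        print(f"after {years} years the gap has widened by about {gap_factor(years):,.0f}x")
```

Because the gap itself compounds, any memory strategy that only tracks GPU memory growth falls further behind with every generation, which is the motivation for adding system memory outside the GPU.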

Barriers to progress

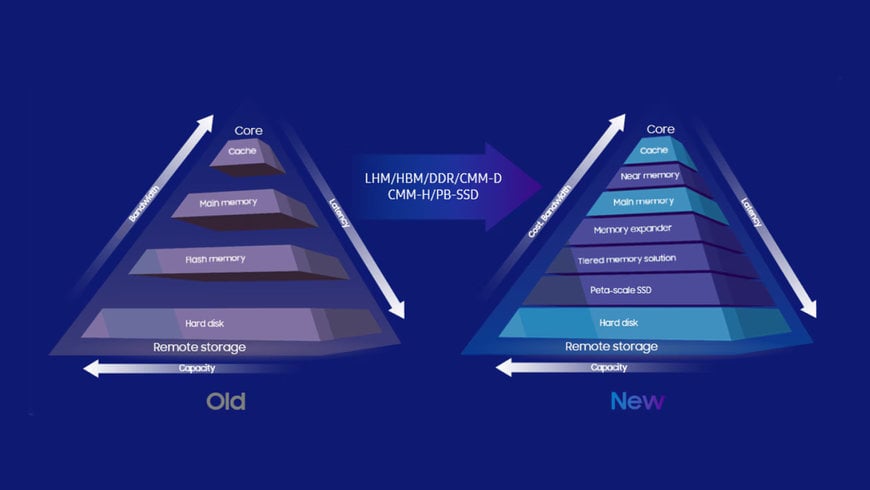

As the industry rapidly heads toward the limits of nanotechnology, semiconductors are facing several barriers to progress, specifically latency, power, bandwidth, and capacity. These elements are all connected: to improve one, the others must be sacrificed. Until now, these trade-offs have been overcome by shrinkage, new materials, and new technologies. But as the industry gets closer to single-digit design rules, there is very little room for improvement. One solution is to make the most of what is already available by utilizing memory beyond GPUs. While that addresses the issue, it doesn’t eliminate the problem entirely. Since the amount of memory is limited by CPU capacity, memory remains fully dependent on the existing CPU architecture, the same system architecture that von Neumann defined nearly 80 years ago. To address this, it will be necessary to leverage Compute Express Link (CXL) technology and adopt more fine-tuned tiering solutions. By developing a deep and efficient memory hierarchy with this sort of CXL-based approach, system designers can build a more heterogeneous, memory-centric architecture. Ultimately, that means improved flexibility and better management of total cost of ownership (TCO). To achieve this goal, Samsung Semiconductor offers a number of unique technology solutions.

CMM-D technology for the AI era

CMM-D is based on Samsung's DRAM technology and integrates the CXL open standard interface developed through the CXL Consortium. CXL is a high-speed, low-latency CPU-to-device interconnect built on the PCIe physical layer, providing efficient connectivity between the host CPU and connected devices such as accelerators and memory expansion products. Traditionally, adding memory capacity to a system involves increasing the number of native CPU memory channels. A CXL Type 3 memory expansion device doesn’t require these new channels. Samsung introduced this type of device at this CloudFest, providing a flexible and powerful option to increase memory capacity and bandwidth without increasing the number of primary CPU memory channels. CMM-D also provides memory coherency. One of the most important features of CXL technology is that it maintains coherency between the directly attached CPU memory and the memory on the CXL device, so the CXL host and CXL device can work on shared data with a guarantee that they see the same copy of a given memory location. The home agent does not allow simultaneous changes to the data, so once a change is made by either the host or the attached device, all copies of the data remain consistent. The CXL 2.0 specification also supports single-level switching and memory pooling. Pooling increases the overall efficiency of a system by allowing dynamic allocation and deallocation of memory resources, which also reduces stranded memory, a common problem observed in server systems.
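The effect of pooling on stranded memory can be illustrated with a toy capacity model. The sketch below is not a CXL API and does not reflect how CMM-D is managed. The `provision` function, the host names, and all capacities are hypothetical numbers chosen for illustration. It compares giving every host a large fixed amount of local DRAM against giving each host less local DRAM plus a shared pool that is handed out on demand.

```python
def provision(demands: dict[str, int], local_gib: int, pool_gib: int = 0) -> tuple[int, int]:
    """Serve each host from its local DRAM first, then from a shared pool.

    Returns (idle_gib, unmet_gib): provisioned memory that nobody uses,
    and demand that could not be satisfied.
    """
    remaining_pool = pool_gib
    served_total = 0
    for demand in demands.values():
        from_local = min(demand, local_gib)
        from_pool = min(demand - from_local, remaining_pool)  # first come, first served
        remaining_pool -= from_pool
        served_total += from_local + from_pool
    provisioned = local_gib * len(demands) + pool_gib
    idle = provisioned - served_total
    unmet = sum(demands.values()) - served_total
    return idle, unmet


# Hypothetical per-host memory demand in GiB (illustrative only).
demands = {"host-a": 100, "host-b": 500, "host-c": 150, "host-d": 450}

fixed = provision(demands, local_gib=512)                 # every host sized for the peak
pooled = provision(demands, local_gib=256, pool_gib=512)  # smaller local DRAM plus a pool
```

In this made-up scenario both designs satisfy all demand, but the fixed design leaves 848 GiB idle while the pooled design leaves 336 GiB idle, because capacity that lightly loaded hosts do not need is available to the heavily loaded ones.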

CMM-H: Rethinking storage for memory-centric computing

In addition to the CMM-D, CloudFest also saw Samsung Semiconductor discuss its latest flash-based CXL device, the CMM-H. Featuring internal DRAM and NAND memory, the CMM-H provides a more efficient way to move data between memory and storage, supporting fine-grained access to AI and ML models within storage applications. Samsung recently used this device to develop a deep learning recommendation model (DLRM) application for a customer. Thanks to the DRAM caching capability, Samsung achieved significantly higher throughput than with SSDs: according to the latest results, up to seven times higher than that of conventional SSDs. This has the potential to significantly reduce the customer’s TCO and improve their overall efficiency and performance.
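The general idea of a DRAM cache in front of NAND can be sketched with a small simulation. The article does not describe the CMM-H's internal caching policy, so the least-recently-used (LRU) eviction below is an assumption, and the `DramCachedFlash` class is a hypothetical illustration rather than a model of the actual device.

```python
from collections import OrderedDict


class DramCachedFlash:
    """Toy model: a small DRAM cache (LRU, assumed) in front of NAND blocks."""

    def __init__(self, cache_blocks: int) -> None:
        self.cache_blocks = cache_blocks
        self.cache: OrderedDict[int, bool] = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, block: int) -> None:
        if block in self.cache:
            # Served from DRAM; mark the block as most recently used.
            self.cache.move_to_end(block)
            self.hits += 1
            return
        # Served from NAND; bring the block into the DRAM cache.
        self.misses += 1
        self.cache[block] = True
        if len(self.cache) > self.cache_blocks:
            self.cache.popitem(last=False)  # evict the least recently used block

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


device = DramCachedFlash(cache_blocks=2)
for block in [1, 2, 1, 3, 2, 1]:
    device.read(block)
```

Workloads that revisit a small set of hot blocks, as embedding lookups in recommendation models often do, see most reads served from DRAM, which is one plausible reason a cached device can outperform a conventional SSD on such access patterns.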

Peta-scale storage via PBSSD

The last memory product discussed at CloudFest was peta-scale storage, offered in the form of the PBSSD. With data forming the backbone of AI and ML, Samsung Semiconductor has been putting its flash expertise to work at the storage subsystem level. To do that, there has been continued innovation at the SSD level, pushing down media costs while increasing density. Additionally, Samsung has developed a group of system-level firmware features to optimize storage OpEx. These features include workload-adaptive power management (saving up to 20% power), health monitoring, a high-performance NVMe-oF interface, non-disruptive drive upgrades, and security features such as AI-based ransomware detection. On top of this, Samsung is also exploring new storage form factors that could push densities even higher than those possible within a conventional drive form factor.

The foundations for AI innovation

As the world moves further into the era of AI, the industry must begin to accept the limitations of existing hardware. The problem is what Samsung described as an “age of infinite data, in a world where hardware resources are finite.” As the world reaches the limits of standard memory products, it is vital to explore new possibilities for memory and AI. That means working collaboratively and embracing a new pace of change. Samsung is preparing to enter a new era with products and solutions that offer rapid differentiation and exciting opportunities for the industry to innovate and grow. Samsung is committed to making this future possible by working closely with our trusted partners to develop innovative memory solutions that allow us to keep up with the pace of change and take hold of new opportunities.
